Technical SEO is the discipline of optimizing a website’s infrastructure so that search engines can crawl, render, index, and rank it efficiently. For business websites, technical SEO ensures that every page is accessible, fast, mobile-friendly, secure, and clearly understood by both search engines and AI-driven answer systems.

Summary

- Technical SEO determines whether your site is even eligible to rank

- Crawlability, speed, and indexation errors silently suppress growth

- Core Web Vitals influence both rankings and conversions

- Mobile-first indexing makes desktop-only optimization obsolete

- Structured data improves visibility in AI-powered search results

- Technical SEO is ongoing, not a one-time setup

Why Technical SEO Is Foundational for Business Growth

Most SEO strategies fail not because the content is weak, but because the website itself creates friction. Search engines operate at scale. They reward efficiency, clarity, and consistency. When your site introduces confusion—through broken links, slow load times, duplicate URLs, or rendering issues—your rankings erode quietly.

What makes technical SEO especially critical for businesses is scale. A single technical issue rarely affects just one page. It affects dozens, hundreds, or thousands of URLs simultaneously. That means one overlooked configuration can suppress an entire product line, service category, or blog archive.

Unlike content or links, technical SEO does not compound automatically. It must be monitored, maintained, and corrected deliberately.

What Technical SEO Actually Covers (In Practical Terms)

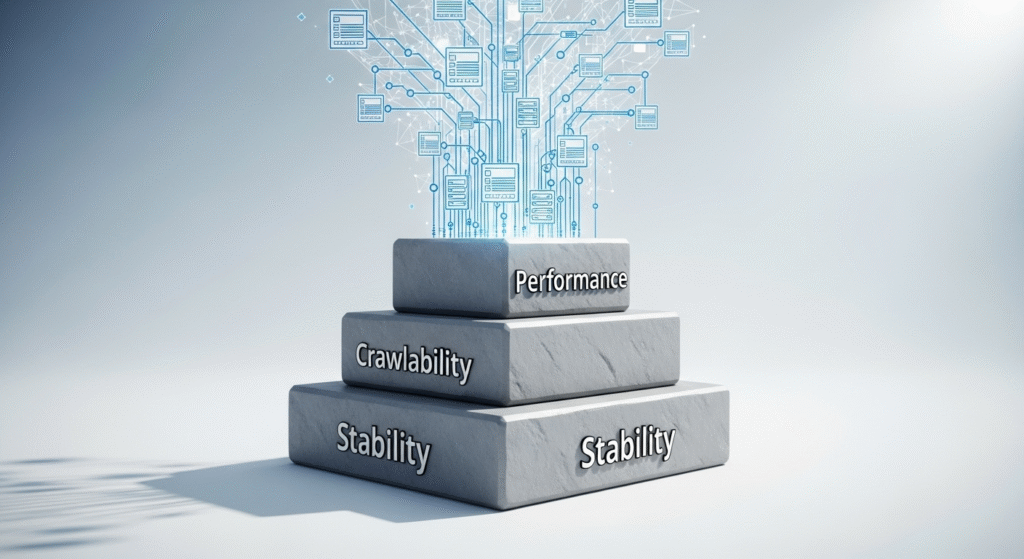

Technical SEO is not a checklist item. It is a system that governs how your website communicates with search engines.

At its core, technical SEO answers four questions:

- Can search engines access your pages reliably?

- Can they render and understand your content accurately?

- Can they determine which pages are most important?

- Can they trust your site to deliver a good user experience?

To answer those questions, technical SEO focuses on crawl access and crawl efficiency, indexation control and URL consolidation, site architecture and internal linking logic, page speed and performance signals, mobile usability and parity, security and protocol consistency, and structured data and semantic clarity.

Each area reinforces the others. Weakness in one creates drag across the entire site.

How Search Engines Crawl, Render, and Index Websites

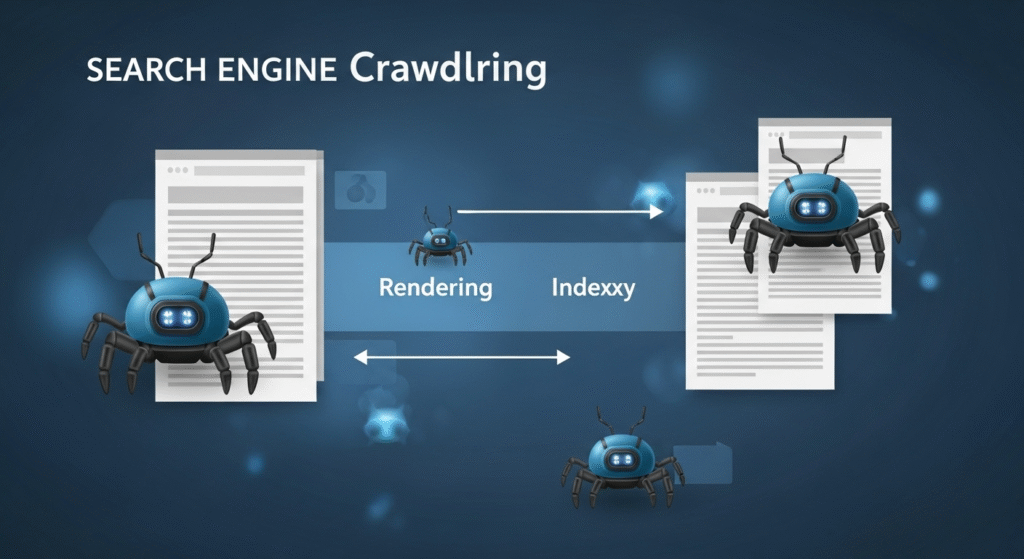

Search engines do not evaluate your site as a finished product. They experience it as a process.

First, crawlers discover URLs by following links, reading XML sitemaps, and observing external references. Once discovered, Google attempts to fetch the page’s resources, execute scripts, and render the content as a user would see it.

Only after that does Google decide whether the page should be indexed and how it relates to other pages on the site.

Business websites often break this process unintentionally. Navigation built entirely in JavaScript without crawlable fallbacks, important content loaded only after user interaction, server response delays that cause crawl throttling, and infinite URL combinations created by filters or parameters are common causes.

When crawl efficiency drops, Google does not simply slow down on your less important pages. It crawls fewer pages across the whole site.
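To make the point about JavaScript-only navigation concrete, here is a minimal sketch that lists the links a crawler could follow from the raw HTML, before any script runs. The `crawlable_links` helper and the sample markup are hypothetical, and the sketch assumes that only `<a>` elements with a real `href` count as followable links.

```python
from html.parser import HTMLParser


class AnchorCollector(HTMLParser):
    """Collects href values from <a> tags found in raw HTML."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip missing hrefs, fragment-only links, and javascript: pseudo-links.
        if href and not href.startswith(("#", "javascript:")):
            self.hrefs.append(href)


def crawlable_links(raw_html):
    """Return the hrefs a crawler could follow without executing scripts."""
    parser = AnchorCollector()
    parser.feed(raw_html)
    return parser.hrefs


# Hypothetical navigation: only the first item is a plain, followable link.
sample = """
<nav>
  <a href="/services/">Services</a>
  <a href="#" onclick="openMenu()">Products</a>
  <span onclick="location.href='/pricing/'">Pricing</span>
</nav>
"""
print(crawlable_links(sample))
```

In this sample only `/services/` is reported. The other two items depend on click handlers, so a crawler reading the raw HTML has nothing to follow.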

Crawl Budget and Why It Matters More Than You Think

Crawl budget refers to how many URLs Google is willing and able to crawl on your site within a given time frame. For small sites, this is rarely an issue. For growing business sites, it becomes critical.

Crawl budget is wasted when duplicate URLs exist without consolidation, low-value pages are indexable, redirect chains slow down discovery, and server performance is inconsistent.

Efficient crawl usage ensures that important pages are discovered faster, updated more frequently, and evaluated more accurately.
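Redirect chains are one of the easier sources of crawl waste to detect. The sketch below assumes you have already exported your redirects as a simple source-to-target mapping (for example from a crawl report). The function names and the `max_hops` limit are illustrative and do not come from any specific tool.

```python
def resolve_redirects(start_url, redirects, max_hops=10):
    """Follow start_url through the redirect map and return the full chain.

    Raises ValueError on a redirect loop or when the chain exceeds max_hops.
    """
    chain = [start_url]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError(f"redirect loop via {target}")
        chain.append(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops from {start_url}")
    return chain


def find_redirect_chains(redirects):
    """Return every source URL that needs more than one hop to resolve."""
    chains = {}
    for source in redirects:
        chain = resolve_redirects(source, redirects)
        if len(chain) > 2:  # source plus final target is a single hop
            chains[source] = chain
    return chains


# Hypothetical redirect export: the first entry takes two hops to resolve.
redirects = {
    "http://example.com/old": "https://example.com/old",
    "https://example.com/old": "https://example.com/new/",
    "https://example.com/promo": "https://example.com/offers/",
}
for source, chain in find_redirect_chains(redirects).items():
    print(source, "->", " -> ".join(chain[1:]))
```

Each chain found this way can usually be collapsed by pointing the first URL directly at the final destination.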

Site Architecture and Internal Linking Strategy

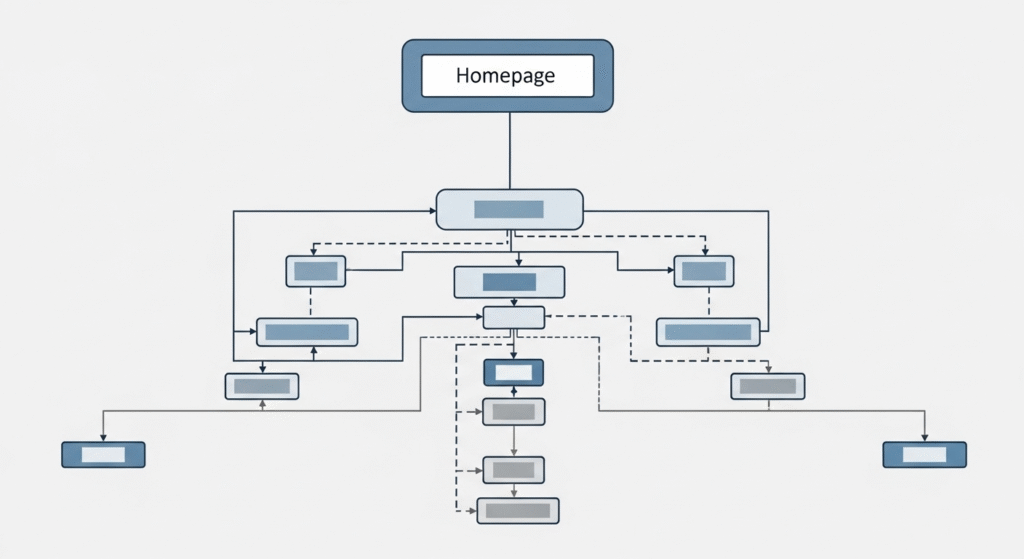

Site architecture is how pages are organized and connected. Internal linking is how authority flows through that structure.

Strong architecture communicates hierarchy, distributes link equity intentionally, and reduces crawl depth for important pages.

Business sites perform best when critical pages are reachable within two to three clicks from the homepage. Pages buried deeper lose priority and authority.

Internal links should not be random. They should reinforce topical relevance and business priorities. Linking from informational content to transactional pages helps search engines understand commercial intent and importance.
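Click depth can be measured rather than guessed. As a rough sketch, assuming you have an internal link graph from a crawl (a hypothetical mapping of each page to the pages it links to), a breadth-first search from the homepage gives the minimum number of clicks to reach every page:

```python
from collections import deque


def click_depths(link_graph, home="/"):
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: one landing page sits four clicks from the homepage.
site = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/blog/post-1/appendix/"],
    "/blog/post-1/appendix/": ["/landing/deep-offer/"],
}
depths = click_depths(site)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
print(too_deep)
```

Pages that appear in your CMS but never show up in `depths` are orphans: no internal link path reaches them at all.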

URL Structure and Consistency

URLs are not just addresses. They are signals.

Clean URLs are short and descriptive, free of unnecessary parameters, consistent in format, and aligned with site hierarchy.

Problems arise when multiple URLs serve the same content. Trailing slashes, capitalization differences, tracking parameters, and session IDs all create duplication unless controlled.

Every business website should have a single, canonical version of each page, reinforced by consistent internal linking.
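One way to find duplicate URL variants is to normalize every crawled URL to a single form and group the ones that collide. The sketch below is built on assumptions you should adapt: it forces HTTPS, lowercases the host and path, appends a trailing slash to paths that do not look like files, and strips a hypothetical list of tracking parameters. Your own canonical policy may differ, especially on sites where paths are case-sensitive.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking/session parameters; extend this to match your own site.
TRACKING_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid", "sessionid",
}


def normalize_url(url):
    """Map a URL variant to one normalized form under the assumed policy."""
    parts = urlsplit(url)
    path = parts.path.lower() or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and "." not in last_segment:
        path += "/"  # treat extensionless paths as directories
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in TRACKING_PARAMS
    )
    return urlunsplit(("https", parts.netloc.lower(), path, urlencode(kept), ""))


def duplicate_groups(urls):
    """Group URLs that normalize to the same form; keep groups of 2 or more."""
    groups = {}
    for url in urls:
        groups.setdefault(normalize_url(url), []).append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}


crawled = [
    "https://example.com/services/",
    "https://example.com/Services",
    "http://example.com/services/?utm_source=newsletter",
    "https://example.com/pricing/",
]
print(duplicate_groups(crawled))
```

Each group it reports is a set of addresses that should resolve to one canonical URL through redirects, canonical tags, or corrected internal links.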

Core Web Vitals and Performance Optimization

Performance is no longer a secondary concern. It is a ranking signal, a conversion signal, and a trust signal.

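Core Web Vitals measure how users experience your site in the real world: Largest Contentful Paint (LCP) for loading, Interaction to Next Paint (INP) for responsiveness, and Cumulative Layout Shift (CLS) for visual stability. Slow loading, delayed interaction, and unstable layouts all signal poor quality.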

Performance issues often originate from oversized images, excessive JavaScript execution, third-party scripts, and poor server response times.

Optimizing performance is about removing friction. Every delay increases abandonment. Every shift reduces trust.
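To turn field data into something actionable, it helps to rate each metric against Google's published thresholds for the three Core Web Vitals: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS). The sketch below assumes you already have 75th-percentile values from a field-data source. The metric keys and function names are hypothetical.

```python
# (good upper bound, needs-improvement upper bound) per metric.
# LCP and INP are in milliseconds; CLS is a unitless score.
THRESHOLDS = {
    "lcp_ms": (2500, 4000),
    "inp_ms": (200, 500),
    "cls": (0.1, 0.25),
}


def rate_metric(name, value):
    """Classify one metric value as good, needs improvement, or poor."""
    good_max, needs_improvement_max = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def rate_page(metrics):
    """Rate every metric measured for a page."""
    return {name: rate_metric(name, value) for name, value in metrics.items()}


# Hypothetical field data for one template.
print(rate_page({"lcp_ms": 3100, "inp_ms": 180, "cls": 0.31}))
```

Rating by template (product page, category page, article) rather than by individual URL tends to show where a single fix will help many pages at once.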

Mobile-First Indexing and Content Parity

Google indexes and ranks your site primarily based on its mobile version. That means mobile content is not supplemental. It is authoritative.

If content exists on desktop but not on mobile, Google treats it as missing. If internal links are present on desktop but removed on mobile, authority flow is disrupted.

Mobile optimization requires responsive design, identical primary content across devices, consistent internal linking, and fast, stable interaction on touch devices.
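Content parity can be spot-checked by comparing what the desktop and mobile versions of a page actually contain. This is a simplified sketch: it assumes you have fetched both HTML versions yourself, and it compares only internal links and `h1` to `h3` headings. The `parity_gaps` name is hypothetical.

```python
from html.parser import HTMLParser


class PageOutline(HTMLParser):
    """Collects link targets and heading text from one HTML document."""

    HEADING_TAGS = {"h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self.links = set()
        self.headings = set()
        self._in_heading = False
        self._heading_text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)
        elif tag in self.HEADING_TAGS:
            self._in_heading = True
            self._heading_text = []

    def handle_data(self, data):
        if self._in_heading:
            self._heading_text.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADING_TAGS and self._in_heading:
            text = " ".join("".join(self._heading_text).split())
            if text:
                self.headings.add(text)
            self._in_heading = False


def outline(html):
    parser = PageOutline()
    parser.feed(html)
    return parser


def parity_gaps(desktop_html, mobile_html):
    """Report links and headings present on desktop but absent on mobile."""
    desktop, mobile = outline(desktop_html), outline(mobile_html)
    return {
        "missing_links": sorted(desktop.links - mobile.links),
        "missing_headings": sorted(desktop.headings - mobile.headings),
    }


desktop_page = (
    '<h1>SEO Services</h1><h2>Pricing</h2>'
    '<a href="/contact/">Contact</a><a href="/pricing/">Pricing</a>'
)
mobile_page = '<h1>SEO Services</h1><a href="/contact/">Contact</a>'
print(parity_gaps(desktop_page, mobile_page))
```

Anything listed in the result is content or link equity that exists only on desktop, which is the version Google largely ignores.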

Indexation Control and Signal Alignment

Search engines rely on explicit instructions. When signals conflict, they default to caution.

Indexation is controlled through robots.txt directives (which govern crawling rather than indexing itself), meta robots tags, canonical tags, and HTTP status codes.

Each important page should be crawlable, indexable, canonicalized correctly, and return a clean 200 status code.

Anything else introduces ambiguity.
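A quick way to catch conflicting signals is to evaluate all four in one place for each page you expect to rank. The sketch below assumes a hypothetical page record (URL, status code, meta robots value, canonical URL) taken from a crawl export, and it uses Python's standard `urllib.robotparser` to evaluate the robots.txt rules. That parser only approximates how Googlebot interprets the file.

```python
from urllib.robotparser import RobotFileParser


def indexation_issues(page, robots_txt, user_agent="Googlebot"):
    """Return the signals that would keep an important page out of the index."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())

    issues = []
    if not robots.can_fetch(user_agent, page["url"]):
        issues.append("blocked by robots.txt")
    if page["status"] != 200:
        issues.append(f"status {page['status']}")
    if "noindex" in (page.get("meta_robots") or "").lower():
        issues.append("meta robots noindex")
    canonical = page.get("canonical")
    if canonical and canonical != page["url"]:
        issues.append(f"canonical points to {canonical}")
    return issues


# Hypothetical robots.txt and page records.
robots_txt = "User-agent: *\nDisallow: /cart/\n"
page_ok = {
    "url": "https://example.com/services/",
    "status": 200,
    "meta_robots": "index, follow",
    "canonical": "https://example.com/services/",
}
page_conflicted = {
    "url": "https://example.com/cart/checkout",
    "status": 200,
    "meta_robots": "noindex",
    "canonical": "https://example.com/cart/",
}
print(indexation_issues(page_ok, robots_txt))
print(indexation_issues(page_conflicted, robots_txt))
```

The second record shows a common conflict: a page that is blocked in robots.txt and also carries a noindex tag. Because the crawler is not allowed to fetch the page, it never sees the noindex instruction.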

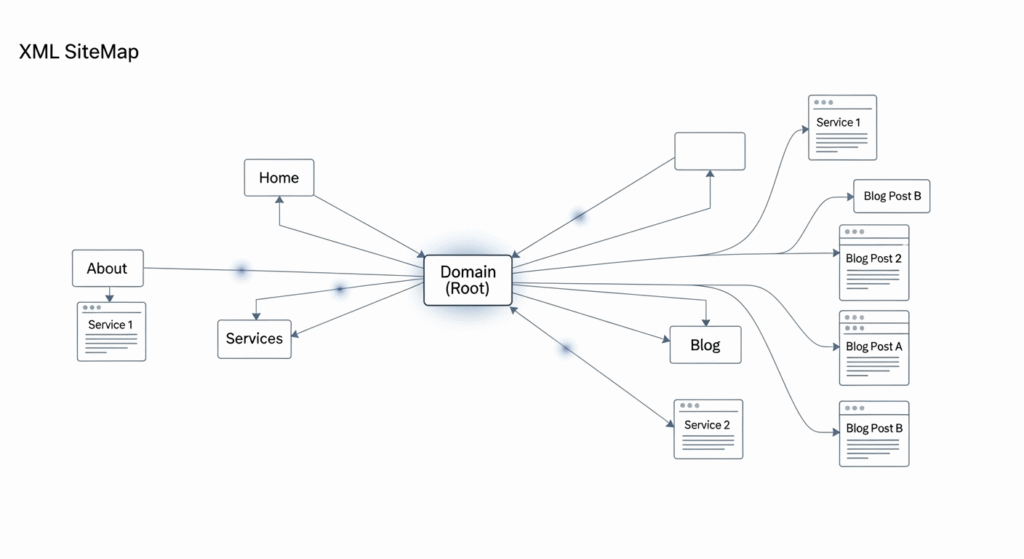

XML Sitemaps and Discovery Efficiency

XML sitemaps help search engines prioritize crawling. They do not override architecture, but they support it.

Effective sitemaps include only canonical, indexable URLs, exclude duplicates and low-value pages, and update automatically as content changes.

Submitting sitemaps through Google Search Console improves discovery, especially for large or frequently updated sites.
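As an illustration of the "canonical, indexable URLs only" rule, here is a minimal sitemap generator. It assumes a hypothetical list of page records with `url`, `status`, `noindex`, `canonical`, and `lastmod` fields. In practice most CMS platforms or plugins generate this file for you, so treat this as a sketch of the filtering logic rather than a replacement for them.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML containing only canonical, indexable 200 pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        is_canonical = page["canonical"] == page["url"]
        if page["status"] != 200 or page["noindex"] or not is_canonical:
            continue  # leave out anything search engines should not index
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = page["url"]
        ET.SubElement(entry, "lastmod").text = page["lastmod"]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical crawl records: only the first page qualifies.
pages = [
    {"url": "https://example.com/services/", "status": 200, "noindex": False,
     "canonical": "https://example.com/services/", "lastmod": "2024-05-01"},
    {"url": "https://example.com/cart/", "status": 200, "noindex": True,
     "canonical": "https://example.com/cart/", "lastmod": "2024-05-01"},
    {"url": "https://example.com/services/?sort=asc", "status": 200, "noindex": False,
     "canonical": "https://example.com/services/", "lastmod": "2024-05-01"},
]
print(build_sitemap(pages))
```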

Structured Data and Semantic Understanding

Structured data translates your content into a language search engines and AI systems understand precisely.

Schema markup clarifies page type, entity relationships, questions and answers, and products or services.

In AI-powered search environments, clarity beats inference. Pages with explicit semantic signals are easier to trust, parse, and surface.
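For questions and answers, structured data usually takes the form of a schema.org `FAQPage` object embedded as JSON-LD. The sketch below builds that markup from a list of question and answer pairs. The `faq_jsonld` helper is hypothetical, and whether a search engine shows any enhanced result for the markup is entirely up to the search engine.

```python
import json


def faq_jsonld(qa_pairs):
    """Return a JSON-LD script tag describing the pairs as a schema.org FAQPage."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    # Escape "</" so answer text can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(faq_jsonld([
    (
        "What is technical SEO in simple terms?",
        "Technical SEO ensures your website functions correctly for search "
        "engines by improving speed, structure, and indexation so your "
        "content can rank.",
    ),
]))
```

The markup should mirror text that is visibly present on the page. Structured data describing content users cannot see works against the trust it is meant to build.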

Duplicate Content and Canonical Strategy

Duplicate content is rarely malicious. It is usually structural.

Filtered URLs, pagination, tracking parameters, and multiple category paths often create duplication.

Canonical tags, consistent internal linking, and parameter management ensure one version remains authoritative.

HTTPS, Security, and Trust Signals

Security is a baseline expectation. HTTPS is mandatory.

Mixed content, expired certificates, and insecure resource loading erode trust signals. Secure configuration must be enforced site-wide.
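Mixed content is straightforward to detect in page source. As a rough sketch, the scanner below flags resources that an HTTPS page would load over plain HTTP. The set of tags it inspects is an assumption and is not exhaustive (it ignores CSS `url()` references and `srcset`, for example). Ordinary `<a>` links to HTTP pages are deliberately not counted, since they are navigations and not loaded resources.

```python
from html.parser import HTMLParser


class MixedContentFinder(HTMLParser):
    """Flags sub-resources referenced over http:// in a page's HTML."""

    # Assumed tag-to-attribute map for resources the browser loads.
    RESOURCE_ATTRS = {
        "img": "src", "script": "src", "iframe": "src",
        "video": "src", "audio": "src", "source": "src", "link": "href",
    }

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_name = self.RESOURCE_ATTRS.get(tag)
        if attr_name is None:
            return
        attrs = dict(attrs)
        # Only stylesheet links load a resource; canonical and similar do not.
        if tag == "link" and "stylesheet" not in (attrs.get("rel") or "").lower():
            return
        value = attrs.get(attr_name) or ""
        if value.lower().startswith("http://"):
            self.insecure.append((tag, value))


def find_mixed_content(html):
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


page_html = (
    '<link rel="stylesheet" href="https://cdn.example.com/site.css">'
    '<img src="http://cdn.example.com/hero.png">'
    '<script src="https://cdn.example.com/app.js"></script>'
    '<a href="http://example.org/">External link</a>'
)
print(find_mixed_content(page_html))
```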

JavaScript, Rendering, and Modern Frameworks

Search engines can render JavaScript, but not without cost.

Rendering delays slow indexation. Heavy scripts reduce crawl efficiency. Poor implementations hide content until interaction.

Server-side or hybrid rendering, accessible content, and crawlable navigation reduce risk.

Technical SEO Audits and Ongoing Maintenance

Technical SEO degrades over time.

Audits should be performed quarterly, after redesigns, and after major CMS or plugin updates.

Audits should evaluate crawl data, index coverage, performance, internal linking, structured data, and AI visibility readiness.

Technical SEO vs On-Page SEO

Technical SEO enables access and understanding. On-page SEO drives relevance and intent matching.

They overlap in internal linking, page experience, and structured data.

Strong rankings require both.

Real-World Business Impact

When technical SEO works, it is invisible. When it fails, growth stalls.

Businesses with strong technical foundations see faster indexation, more stable rankings, higher engagement, and better AI-search visibility.

Practical Technical SEO Checklist for Business Websites

- Crawlability: Ensure important pages are accessible and error-free

- Indexation: Align robots, canonicals, and meta tags

- Architecture: Keep key pages within three clicks

- Performance: Optimize loading, interaction, and stability

- Mobile: Maintain full content parity

- Schema: Implement structured data consistently

- Security: Enforce HTTPS everywhere

What This Means for Business Owners

Technical SEO multiplies the return on every other SEO investment. Without it, growth is fragile. With it, performance compounds.

If organic search matters to your business, technical SEO is infrastructure.

FAQs

What is technical SEO in simple terms?

Technical SEO ensures your website functions correctly for search engines by improving speed, structure, and indexation so your content can rank.

Is technical SEO still important with AI search results?

Yes. AI Overviews rely on structured, crawlable, trustworthy data—technical SEO enables that.

How long does technical SEO take to show results?

Critical fixes can impact performance within weeks, especially when crawl or speed issues are resolved.

Do small businesses really need technical SEO?

Yes. Smaller sites often see faster gains because improvements affect the entire site.